How to block bad spiders from wasting bandwidth

Search engines use programs known as spiders to crawl the web. A spider is an agent (also called a bot, short for robot) that connects to your website and downloads a copy of all of your pages (or tries to) in order to populate the index of the search engine it works for. However, spiders are supposed to obey certain rules (defined in your robots.txt file), and they are certainly not supposed to thrash your website to the point that it causes a denial of service or uses up all of your bandwidth.

By adding special instructions to a file called .htaccess (the full stop in front of it is intentional), you can instruct your web server to deny requests from specific spiders.

Thankfully, most modern content management systems (CMSs) have a plug-in or extension that blocks these rogue bots automatically. They typically do this by adding a hidden link to your site and telling robots not to follow it. Bots that obey the "do not follow" rule and ignore the link can carry on crawling your site as normal; those that ignore the request and follow the link are automatically blacklisted.
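The hidden-link trap can be modelled in a few lines. This is a toy sketch of the idea, not the code of any of the plug-ins listed below: the trap path `/blackhole/` and the `BlackholeFilter` class are hypothetical, and a real plug-in would store its blacklist persistently and hook into the CMS's own request handling.

```python
# Hypothetical trap path: listed under "Disallow:" in robots.txt and linked
# invisibly from your pages, so only rule-breaking bots ever request it.
TRAP_PATH = "/blackhole/"

ROBOTS_TXT = f"User-agent: *\nDisallow: {TRAP_PATH}\n"


class BlackholeFilter:
    """Toy model of the hidden-link trap used by bad-bot plug-ins."""

    def __init__(self):
        self.blacklist = set()  # IP addresses that have fallen into the trap

    def handle(self, ip: str, path: str) -> int:
        """Return the HTTP status code a request from `ip` for `path` would get."""
        if ip in self.blacklist:
            return 403  # already caught: refuse every further request
        if path.startswith(TRAP_PATH):
            # Only a client that ignored robots.txt would follow the hidden link.
            self.blacklist.add(ip)
            return 403
        return 200
```

Well-behaved crawlers read `ROBOTS_TXT`, skip the trap and keep getting `200`; a bot that follows the link is refused from then on.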

We've listed a few options for the most common CMSs: WordPress, Drupal and Joomla. Below those, we also explain how to identify offenders and add these rules manually.

WordPress - Blackhole for Bad Bots

Drupal - Spider Slap

Joomla - Bad Bot Protection

The manual approach

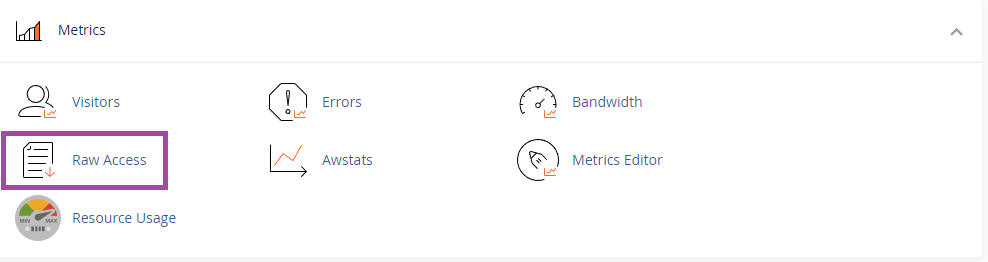

To find the details needed for these steps (the IP address of the spider or its identifying User-Agent), you'll need to download your access logs from within cPanel.

You can download the logs collected so far today by clicking on the domain in question, and you can also download archived log files for previous days. Once you have downloaded and uncompressed the .gz file, you will need to open it in a text editor and do some detective work. Some people use Excel, OpenOffice or other spreadsheet software to split the file into fields. However, this is an advanced article, so we're going to assume you know how to do that!
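If you would rather script the detective work than use a spreadsheet, a short Python sketch like the one below counts requests per IP address and per User-Agent. It assumes the logs are in Apache's "combined" format (as in the example line in Solution 1); `tally` and `tally_file` are hypothetical helpers, not part of cPanel.

```python
import gzip
import re
from collections import Counter

# One line of Apache's "combined" log format:
# ip ident user [time] "request" status bytes "referer" "user-agent"
LOG_RE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<time>[^\]]+)\] "(?P<request>[^"]*)" '
    r'(?P<status>\d{3}) (?P<bytes>\S+) "(?P<referer>[^"]*)" "(?P<agent>[^"]*)"'
)


def tally(lines, top=10):
    """Count requests per IP and per User-Agent; return the `top` of each."""
    ips, agents = Counter(), Counter()
    for line in lines:
        m = LOG_RE.match(line)
        if m is None:
            continue  # skip lines that are not in combined format
        ips[m["ip"]] += 1
        agents[m["agent"]] += 1
    return ips.most_common(top), agents.most_common(top)


def tally_file(path, top=10):
    """Run tally() over a downloaded log, compressed (.gz) or already unpacked."""
    opener = gzip.open if path.endswith(".gz") else open
    with opener(path, "rt", errors="replace") as fh:
        return tally(fh, top)
```

For example, `tally_file("example.com-Jul-2013.gz")` (a hypothetical file name) returns the busiest IP addresses and User-Agents, which are usually the first places to look for a misbehaving spider.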

Solution 1 - ban by IP address

Each line in the access log file will look something like this:

180.76.5.14 - - [22/Jul/2013:20:07:48 +0100] "GET /special-events/action:month/cat_ids:9/tag_ids:37,26/ HTTP/1.0" 500 7309 "-" "Mozilla/5.0 (compatible; Baiduspider/2.0; +http://www.baidu.com/search/spider.html)"

At the very beginning of the line we can see that the IP address of the spider making the request is 180.76.5.14.

To block this IP

If the file public_html/.htaccess does not already exist, you can create an empty one using the cPanel File Manager.

Add the following to the top of the file, replacing x.x.x.x with the IP address of the bad spider:

order allow,deny
allow from all
deny from x.x.x.x
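With `order allow,deny`, Apache 2.2 requires a request to match at least one `allow` line; any matching `deny` line then overrides it, and anything matching neither is refused. The sketch below models that rule for plain IP addresses and CIDR blocks only (Apache also accepts partial addresses and host names, which this ignores). `allowed_allow_deny` is a hypothetical helper for reasoning about your rules, not something Apache runs.

```python
import ipaddress


def _matches(ip, rule):
    """True if ip matches an allow/deny argument: 'all', one IP or a CIDR block."""
    if rule.lower() == "all":
        return True
    return ipaddress.ip_address(ip) in ipaddress.ip_network(rule, strict=False)


def allowed_allow_deny(ip, allow_rules, deny_rules):
    """Model of Apache 2.2 'Order Allow,Deny' for IP and CIDR rules only."""
    # Step 1: at least one allow rule must match, otherwise the request is refused.
    if not any(_matches(ip, rule) for rule in allow_rules):
        return False
    # Step 2: any matching deny rule overrides the allow.
    return not any(_matches(ip, rule) for rule in deny_rules)
```

With `allow from all` and `deny from 180.76.5.14`, every visitor except 180.76.5.14 gets through.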

Bots very often use a range of IP addresses. For example, Baiduspider, a Chinese search engine spider that causes problems for many of our customers, appears to use addresses from 180.76.5.0 to 180.76.6.254 to crawl sites in the UK. To block the whole range, you can use CIDR notation, in which each /24 block covers 256 consecutive addresses (180.76.5.0/24 is 180.76.5.0 to 180.76.5.255):

order allow,deny
allow from all
deny from 180.76.5.0/24
deny from 180.76.6.0/24
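Rather than working out CIDR blocks by hand, you can let Python's standard `ipaddress` module turn a start/end range into the CIDR networks that exactly span it. `deny_lines` is a hypothetical helper; the range used is the Baiduspider range from the text, rounded up to end at 180.76.6.255.

```python
import ipaddress


def deny_lines(first, last):
    """Return 'deny from' lines covering every address from first to last inclusive."""
    start = ipaddress.IPv4Address(first)
    end = ipaddress.IPv4Address(last)
    # summarize_address_range yields CIDR networks that exactly span the range.
    return [f"deny from {net}" for net in ipaddress.summarize_address_range(start, end)]


print("\n".join(deny_lines("180.76.5.0", "180.76.6.255")))
```

This prints the two `deny from` lines shown above. Ending the range at 180.76.6.254 instead produces nine smaller blocks, because the range no longer ends on a /24 boundary, so rounding up to whole /24 blocks (which also blocks the single address 180.76.6.255) is the practical choice.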

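If you only want to apply these rules to a particular directory within your website, the simplest option is to put them in a separate .htaccess file inside that directory (for example public_html/documents/notforbots/.htaccess). If you can edit the main server or virtual host configuration, you can instead use a <LocationMatch> block; note that <LocationMatch> is not permitted in .htaccess files: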

<LocationMatch "^/+documents/+notforbots">
order allow,deny
allow from all
deny from 1.2.3.4
</LocationMatch>

This would block 1.2.3.4 from accessing https://yourwebsite.com/documents/notforbots and any other URL path that begins with it.
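Because the `<LocationMatch>` pattern has no `$` anchor, it is a prefix match. The Python sketch below tests which paths it covers; Python's `re` module and Apache's PCRE treat this simple pattern the same way. `covered` is a hypothetical helper, and `STRICT_RE` is an assumed, tighter variant (not from the original example) that only covers the directory itself and paths beneath it.

```python
import re

# The pattern from the <LocationMatch> example: "/+" accepts one or more
# slashes, and with no "$" anchor any path starting this way matches.
LOCATION_RE = re.compile(r"^/+documents/+notforbots")

# Assumed stricter variant: the directory itself, or something beneath it.
STRICT_RE = re.compile(r"^/+documents/+notforbots(/|$)")


def covered(path, pattern=LOCATION_RE):
    """True if the URL path would fall inside the <LocationMatch> block."""
    return pattern.search(path) is not None


for p in ["/documents/notforbots", "//documents//notforbots/report.pdf",
          "/documents/notforbots-old/", "/documents/public/"]:
    print(p, covered(p), covered(p, STRICT_RE))
```

Note that `/documents/notforbots-old/` is caught by the original pattern but not by the stricter one.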

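You can read more about the Order, Allow and Deny directives (provided by mod_authz_host in Apache 2.2, and by mod_access in earlier versions) here. On Apache 2.4 these directives are deprecated and only work when the mod_access_compat module is loaded; the native 2.4 equivalent is the Require directive.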

Solution 2 - ban by User Agent

If you know how the spider is identifying itself then you can block requests on the basis of the User-Agent HTTP request header.

Using the same example as Solution 1:

180.76.5.14 - - [22/Jul/2013:20:07:48 +0100] "GET /special-events/action:month/cat_ids:9/tag_ids:37,26/ HTTP/1.0" 500 7309 "-" "Mozilla/5.0 (compatible; Baiduspider/2.0; +http://www.baidu.com/search/spider.html)"

The last quote-delimited string is the User-Agent header:

"Mozilla/5.0 (compatible; Baiduspider/2.0; +http://www.baidu.com/search/spider.html)"

The bit we are interested in is Baiduspider/2.0.
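Pulling the User-Agent out of a log line and spotting the bot's name can also be scripted. This sketch assumes the combined log format, where the User-Agent is the last double-quoted field, and treats any `Name/Version` token other than the generic `Mozilla/x.y` prefix as a candidate bot name. That heuristic is an assumption: for ordinary browsers it will also report tokens such as `Chrome/...` or `Safari/...`.

```python
import re

# The User-Agent is the last double-quoted field on a combined-format log line.
LAST_QUOTED = re.compile(r'"([^"]*)"\s*$')
# Tokens shaped like Name/Version, e.g. "Mozilla/5.0" or "Baiduspider/2.0".
PRODUCT = re.compile(r"([A-Za-z][\w.-]*)/(\d[\w.]*)")


def user_agent(line):
    """Return the User-Agent string from a log line, or None if there is none."""
    m = LAST_QUOTED.search(line)
    return m.group(1) if m else None


def bot_tokens(agent):
    """Return Name/Version tokens other than the generic Mozilla/x.y prefix."""
    return [f"{name}/{ver}" for name, ver in PRODUCT.findall(agent) if name != "Mozilla"]
```

Applied to the example line, `bot_tokens(user_agent(line))` picks out `Baiduspider/2.0`.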

We're not really interested in which version of Baiduspider is hitting us, so we're going to block every request whose User-Agent header contains Baiduspider, in any mix of upper and lower case. To do this, add the following to the top of your .htaccess file:

BrowserMatchNoCase baiduspider banned
Deny from env=banned

This would block all requests from the Baiduspider bot, as long as it sends its telltale User-Agent header.
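`BrowserMatchNoCase` is a special case of `SetEnvIfNoCase` applied to the User-Agent header: it runs an unanchored, case-insensitive regular expression against the header and sets the named environment variable when it matches. The sketch below models that behaviour together with `Deny from env=banned`. The `BROWSER_MATCH_NOCASE` table and both functions are hypothetical and only illustrate why `Baiduspider/2.0`, `BAIDUSPIDER` and any future version string are all caught.

```python
import re

# Hypothetical (regex, variable) pairs mirroring "BrowserMatchNoCase <regex> <var>" lines.
BROWSER_MATCH_NOCASE = [
    (re.compile(r"baiduspider", re.IGNORECASE), "banned"),
]


def env_for(user_agent):
    """Return the environment variables BrowserMatchNoCase would set."""
    env = set()
    for regex, var in BROWSER_MATCH_NOCASE:
        if regex.search(user_agent):  # unanchored: matches anywhere in the header
            env.add(var)
    return env


def denied(user_agent):
    """Model of 'Deny from env=banned': refuse the request if 'banned' is set."""
    return "banned" in env_for(user_agent)
```

A bot that changes its User-Agent string slips past this rule, which is when blocking by IP address (Solution 1) is the better tool.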

You can read more about the Apache mod_setenvif module directives here.